A Decade of AI Evolution: From RNNs to LLMs

Ten years ago, Andrej Karpathy’s blog post, The Unreasonable Effectiveness of Recurrent Neural Networks, introduced a deceptively simple idea: recurrent neural networks (RNNs) could generate sequences, one token at a time, surprisingly well. Published on May 21, 2015, the post (available at karpathy.github.io/2015/05/21/rnn-effectiveness/) showcased RNNs generating everything from Shakespearean text to Linux source code, all by predicting the next token from the previous ones. It was a moment of clarity in the early days of deep learning, revealing the raw potential of sequence modeling.

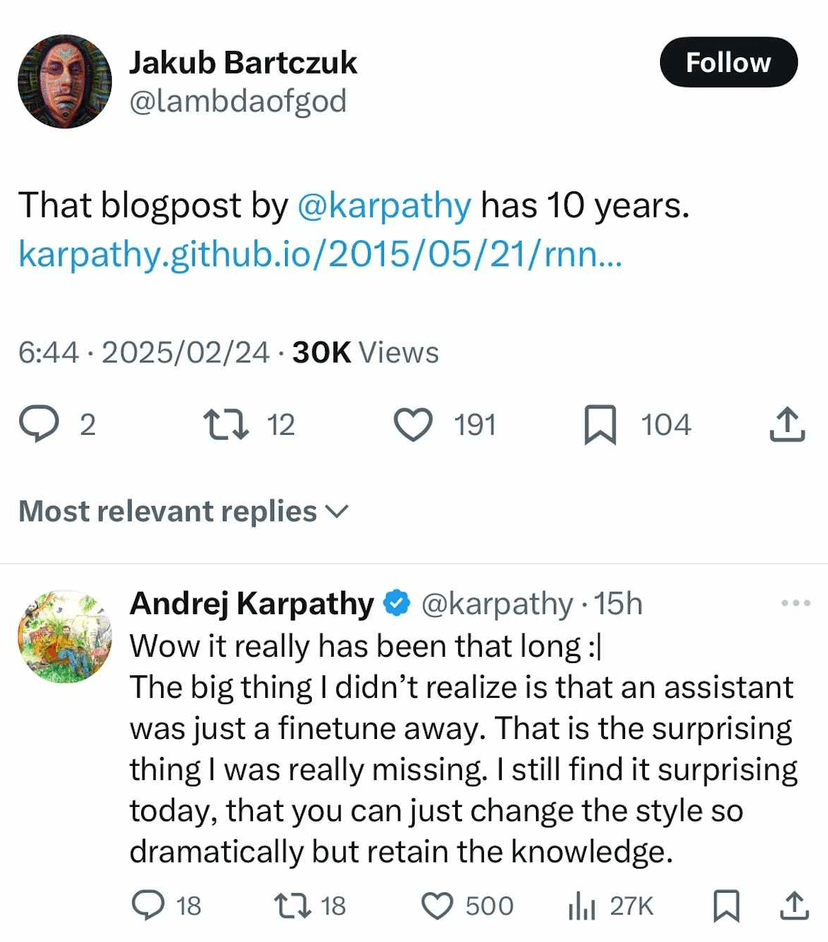
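To make the "predict the next token from the previous ones" loop concrete, here is a minimal sketch. It is not Karpathy’s RNN: it swaps in a character-level bigram counter (a far weaker model) so that it runs with the Python standard library alone. The names `train_bigram` and `generate`, and the tiny corpus, are made up for illustration. What carries over is the generation loop: sample a token, append it, and feed the result back in.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, seed, length, rng):
    """Autoregressive loop: sample the next character, append it, repeat."""
    out = [seed]
    for _ in range(length):
        followers = counts.get(out[-1])
        if not followers:  # no observed successor for this character
            break
        chars = list(followers.keys())
        weights = list(followers.values())
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)


# Hypothetical toy corpus; a real model would train on far more text.
corpus = "to be or not to be that is the question "
model = train_bigram(corpus)
sample = generate(model, "t", 40, random.Random(0))
print(sample)
```

An RNN or a transformer replaces the lookup table with a learned network that conditions on much more than the previous character, but the outer sampling loop has the same shape.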

Fast forward to 2025, and the landscape has transformed dramatically. Karpathy’s recent reflection on Twitter—shared on February 24, 2025—offers a poignant perspective: “The big thing I didn’t realize is that an assistant was just a fine-tune away. That is the surprising thing I was really missing. I still find it surprising today, that you can just change the style so dramatically but retain the knowledge.” This insight captures the leap from RNNs to today’s large language models (LLMs), driven by two key innovations: the transformer architecture and the power of fine-tuning.

The Transformer Revolution

The introduction of transformers, first detailed in the 2017 paper Attention Is All You Need, marked a seismic shift. Unlike RNNs, which process sequences step by step and struggle with long-term dependencies, transformers use self-attention to relate every position in a sequence to every other position, and they can process all positions in parallel during training. That parallelism maps well onto modern accelerators, which made much larger models and datasets practical and enabled LLMs like GPT, LLaMA, and others to generate coherent, context-aware text. The simplicity of Karpathy’s RNN token generation has evolved into a complex, compute-intensive powerhouse, but the core idea of token-to-token prediction remains.
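The contrast between step-by-step recurrence and self-attention can be sketched in a few lines of plain Python. This is a simplified illustration, not the architecture from the paper: the attention function uses a single head with identity projections (queries, keys, and values are all the raw inputs) and no causal mask, whereas GPT-style models use learned projections and a mask. The recurrence is a toy elementwise update with made-up weights `w_h` and `w_x`. All function names here are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(xs):
    """Scaled dot-product self-attention with identity projections."""
    d = len(xs[0])
    out = []
    # Each position's output depends only on the inputs, not on other
    # outputs, so these iterations could all run in parallel.
    for q in xs:
        scores = [dot(q, k) / math.sqrt(d) for k in xs]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, xs)) for j in range(d)])
    return out


def rnn_states(xs, w_h=0.5, w_x=1.0):
    """Toy recurrence: h_t = tanh(w_h * h_{t-1} + w_x * x_t), elementwise."""
    h = [0.0] * len(xs[0])
    states = []
    # Step t needs the hidden state from step t-1, so this loop is
    # inherently sequential.
    for x in xs:
        h = [math.tanh(w_h * hi + w_x * xi) for hi, xi in zip(h, x)]
        states.append(h)
    return states


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three 2-d "token" vectors
attended = self_attention(tokens)
hidden = rnn_states(tokens)
```

In the attention version every output row is a weighted average of all input rows, so distant positions interact directly. In the recurrent version, information from early tokens reaches later ones only by passing through every intermediate hidden state.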

Fine-Tuning: Style Without Losing Knowledge

What’s truly remarkable, as Karpathy notes, is fine-tuning. Modern LLMs start from a base model pretrained on trillions of tokens of general text. Then, with relatively small, targeted datasets, they can be fine-tuned to adopt specific styles, be it a chatty assistant, a technical writer, or even a poetic voice, while retaining their broad knowledge. This flexibility is what makes LLMs so versatile and surprising. A decade ago, achieving such stylistic control would have seemed unimaginable with RNNs, which were limited by their sequential nature and lack of scalability.

Reflections on Progress

Reading Karpathy’s 2015 post today feels like peering into a time capsule. The RNNs he described were elegant in their simplicity but constrained by the technology of the time. The explosion of compute, the transformer architecture, and the art of fine-tuning have unlocked a new era of AI, where models can not only generate text but also adapt their tone and style with precision. It’s a testament to how far we’ve come—and a reminder of the foundational ideas that sparked this revolution.

But what have we learned here?

Basically, nothing has fundamentally changed. We have just found better ways to do it. The simplicity of RNNs was a starting point, and the complexity of LLMs is the destination. But the journey has been one of evolution, not revolution. The core idea of understanding sequences, predicting tokens, and generating text remains the same. It's the scale, the speed, and the style that have transformed beyond recognition.

Simply put, I believe the way towards AGI or ASI remains mysterious, and fundamental breakthroughs are yet to come.